Crypto fraud has existed for as long as digital money itself. Fake investment offers, phishing messages, and deceptive platforms are nothing new. What has changed is how sophisticated these schemes have become. Artificial intelligence has quietly transformed the way crypto scams are created, delivered, and scaled — making them feel far more believable than before.

Many modern scams no longer look suspicious at first glance. They arrive as polished emails, friendly messages, or professional-looking platforms, often reaching people at moments of stress or financial uncertainty. Learning how AI is used in these schemes is no longer optional — it is a practical skill for anyone interacting with crypto.

Older scams relied on volume rather than quality. The same generic message would be sent to thousands of users, hoping someone would respond. AI-driven scams take a different approach.

With widely available AI tools, fraudsters can now simulate entire ecosystems: support teams that reply instantly, investment “advisors” who remember past conversations, realistic websites, and even video messages. What once required a large operation can now be handled by a small group — or even a single person — using automation.

Crypto’s global, fast-moving nature makes this even easier. Transactions are irreversible, communication happens across many platforms, and rules vary by region. When AI is layered on top of this environment, scams become adaptive and difficult to distinguish from legitimate services. On-chain data increasingly shows that a large portion of scam activity is connected to wallets that also interact with AI software providers, signaling how central these tools have become.

AI supports many different scam formats, but several patterns appear repeatedly:

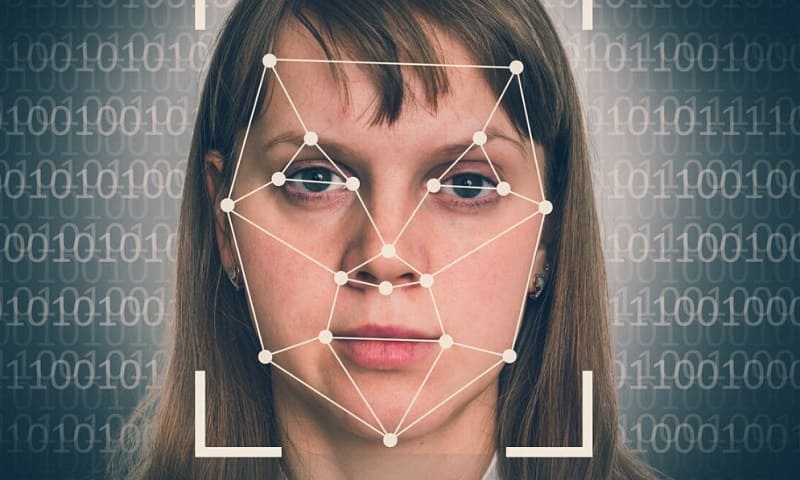

AI-generated content is effective because it mirrors human communication closely. Messages flow naturally, platforms look professional, and videos or voices provide emotional credibility. Traditional red flags — bad grammar or obvious mistakes — are often absent.

Scale is another factor. AI allows thousands of personalized messages to be sent instantly across different languages and platforms. Older fraud detection tools were not designed to handle content that constantly changes and adapts.

Most importantly, these scams are built around psychology. They exploit trust, authority, urgency, and fear. Intelligence is not the weak point — emotion is.

Investigators are adjusting their methods. Instead of relying on keywords, newer systems analyze behavior: message timing, linguistic patterns, and coordinated activity that signals automation.
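To make "analyzing behavior" more concrete, here is a minimal sketch of two simple signals an analyst might compute. It is an illustration, not a description of any particular investigator's or vendor's system. It assumes you already have per-account message timestamps (in seconds) and a list of `(account_id, text)` pairs. The function names and the `0.1` and `3` thresholds are hypothetical, chosen only for the example. The first signal flags accounts whose gaps between messages are suspiciously regular, which is typical of scheduled bots. The second flags the same message text (after normalizing case and whitespace) sent by several distinct accounts, a crude marker of coordinated activity.

```python
from collections import defaultdict
from statistics import mean, pstdev


def interval_cv(timestamps):
    """Coefficient of variation (std / mean) of gaps between consecutive messages.

    Returns None when there are fewer than two gaps to compare.
    """
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    if len(gaps) < 2:
        return None  # not enough data to judge regularity
    avg = mean(gaps)
    if avg == 0:
        return 0.0  # every message sent at the same instant: perfectly regular
    return pstdev(gaps) / avg


def looks_automated(timestamps, threshold=0.1):
    """Hypothetical heuristic: near-constant gaps (low CV) suggest scheduling software."""
    cv = interval_cv(timestamps)
    return cv is not None and cv < threshold


def coordinated_messages(messages, min_accounts=3):
    """Group messages by normalized text and keep texts sent by >= min_accounts accounts.

    messages: iterable of (account_id, text) pairs.
    """
    senders = defaultdict(set)
    for account, text in messages:
        key = " ".join(text.lower().split())  # ignore case and extra whitespace
        senders[key].add(account)
    return {text: accts for text, accts in senders.items() if len(accts) >= min_accounts}


if __name__ == "__main__":
    bot = [0, 60, 120, 180, 240]      # one message exactly every minute
    human = [0, 45, 300, 320, 900]    # irregular, bursty replies
    print(looks_automated(bot), looks_automated(human))

    msgs = [
        ("a", "Send 0.1 BTC now"),
        ("b", "send 0.1 btc  NOW"),
        ("c", "Send 0.1 BTC now"),
        ("d", "hello"),
    ]
    print(coordinated_messages(msgs))
```

Either signal alone is easy to evade; a bot can add random delays or paraphrase each message. That is why the text above stresses combining timing, linguistic, and coordination features rather than relying on any single check.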

Blockchain analysis remains central. By following transaction flows, analysts can map scam networks and identify points where funds move into exchanges or other services. Cooperation between security firms, platforms, and authorities improves the chances of disrupting large campaigns early.
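"Following transaction flows" is, at its simplest, a graph search. The sketch below is a hypothetical illustration and not any analytics firm's actual method. It assumes the transfers have already been extracted into a dictionary mapping each address to the addresses it sent funds to, and that a set of known exchange deposit addresses is available. All address names are made up. It runs a breadth-first search from a scam wallet and records the shortest hop path to each exchange it reaches, stopping at that exchange because that is the point where investigators would typically request account information.

```python
from collections import deque


def trace_to_exchanges(transfers, start, exchange_addresses, max_hops=6):
    """Breadth-first search over outgoing transfers starting at `start`.

    transfers: dict mapping address -> list of destination addresses.
    exchange_addresses: set of addresses assumed to belong to exchanges.
    Returns a dict mapping each reached exchange address to its shortest path.
    """
    parent = {start: None}          # also serves as the visited set
    queue = deque([(start, 0)])
    paths = {}
    while queue:
        addr, hops = queue.popleft()
        if addr in exchange_addresses and addr != start:
            # Walk parent links back to the start to rebuild the path.
            path, node = [], addr
            while node is not None:
                path.append(node)
                node = parent[node]
            paths[addr] = path[::-1]
            continue  # funds reached a service; do not expand further
        if hops == max_hops:
            continue  # limit how far the trace fans out
        for dst in transfers.get(addr, []):
            if dst not in parent:
                parent[dst] = addr
                queue.append((dst, hops + 1))
    return paths


if __name__ == "__main__":
    transfers = {
        "scam_wallet": ["hop_1", "hop_2"],
        "hop_1": ["hop_3"],
        "hop_2": ["exchange_A"],
        "hop_3": ["exchange_B", "hop_1"],
    }
    for exchange, path in trace_to_exchanges(
        transfers, "scam_wallet", {"exchange_A", "exchange_B"}
    ).items():
        print(exchange, " -> ".join(path))
```

Real tracing is considerably harder: it has to account for amounts, mixers, cross-chain bridges, and address clustering. The `max_hops` cap here is simply a hypothetical guard against the search fanning out across unrelated activity.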

Awareness remains the strongest defense.

Above all, slow down. Scams rely on pressure. Legitimate services do not demand immediate action or secret information.

AI has not made crypto fraud inevitable — it has made it more convincing. The same technology driving innovation is also being misused. Understanding how deception works does not remove all risk, but it makes manipulation far less effective. In an environment where scams can look real, awareness and verification remain the most reliable forms of protection.